AI APIs let teams add production-grade AI via HTTP: faster launch, scalable architecture, clearer privacy controls, and predictable costs—plus code.

Table of contents

- The real value of AI APIs

- How AI APIs fit into product & engineering

- Governance checklist: privacy, reliability, cost

- API example: Universal Background Removal (Node.js)

- AI APIs vs self-training models: how to decide

- Recommended reading (anchors)

The real value of AI APIs

When teams search “best ai avatar services for multilingual customer engagement” or “best ai avatar services for multilingual marketing campaigns,” they’re rarely buying a single “cool feature.” They’re buying a reliable delivery mechanism for AI: predictable integration, clear operating boundaries, and fast iteration.

AI APIs win because they turn AI into a standard software dependency:

1) Faster time-to-value

- No dataset collection, labeling, training cycles, model hosting, or GPU planning before you can launch.

- Your team can prototype and ship by integrating an endpoint with an API key.

2) More scalable architecture

AI APIs fit cleanly into common patterns:

- queue + workers

- retries with backoff

- rate limiting

- caching by input hash

- graceful fallbacks

This makes “AI features” behave like other production services.
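The sketch below illustrates the rate-limiting pattern from the list above with a minimal in-memory token bucket in JavaScript (CommonJS, Node 18+). The class name, the per-key buckets and the injectable clock are assumptions made for this sketch. A deployment with several gateway instances would keep this state in a shared store instead.

```javascript
// Minimal token-bucket rate limiter (in-memory, single process).
// Assumption: one bucket per key (e.g. per user or per API key).
class TokenBucket {
  constructor(capacity, refillPerSecond, now = () => Date.now()) {
    this.capacity = capacity;             // max burst size
    this.refillPerSecond = refillPerSecond;
    this.now = now;                       // injectable clock for testing
    this.buckets = new Map();             // key -> { tokens, updatedAt }
  }

  // Returns true if the request may proceed, false if it should be rejected (e.g. HTTP 429).
  tryRemove(key, cost = 1) {
    const t = this.now();
    const b = this.buckets.get(key) ?? { tokens: this.capacity, updatedAt: t };
    // Refill in proportion to elapsed time, never above capacity.
    const elapsedSec = (t - b.updatedAt) / 1000;
    b.tokens = Math.min(this.capacity, b.tokens + elapsedSec * this.refillPerSecond);
    b.updatedAt = t;
    this.buckets.set(key, b);
    if (b.tokens < cost) return false;
    b.tokens -= cost;
    return true;
  }
}
```

In a gateway, call `limiter.tryRemove(userId)` before forwarding a request. When it returns `false`, respond with HTTP 429.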

3) Clearer privacy & compliance story

Enterprise buyers ask the same questions every time:

- What happens to uploads?

- How long are outputs retained?

- Is customer data used for training?

With AI APIs, you can document a repeatable policy (and enforce it in your gateway).
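A repeatable policy can be written once as data and then checked in code. The sketch below assumes a hypothetical `RETENTION_POLICY` object and a hypothetical stored-output record shape (`id`, `createdAtMs`). The 24-hour window is a placeholder, not any provider's actual term.

```javascript
// Hypothetical retention policy, documented once and enforced in the gateway.
// Values are placeholders; set them from your actual terms.
const RETENTION_POLICY = Object.freeze({
  storeUploads: false,        // uploads are forwarded to the provider, never persisted by us
  outputRetentionHours: 24,   // how long we keep generated outputs
});

// Returns IDs of stored outputs past the retention window,
// for a scheduled cleanup job to delete.
function expiredOutputIds(records, policy = RETENTION_POLICY, nowMs = Date.now()) {
  const windowMs = policy.outputRetentionHours * 60 * 60 * 1000;
  return records
    .filter((r) => nowMs - r.createdAtMs > windowMs)
    .map((r) => r.id);
}

// Rejects a request that would violate the documented policy
// before anything is written to storage.
function assertRequestAllowed(req, policy = RETENTION_POLICY) {
  if (req.persistUpload && !policy.storeUploads) {
    throw new Error("Policy violation: uploads must not be stored");
  }
}
```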

4) Predictable cost and ROI

Instead of budgeting GPUs + MLOps headcount, you align cost with usage:

- you can forecast by request volume

- you can cap by user plan / credits

- you can measure ROI per workflow or feature
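The arithmetic behind forecasting, capping and ROI is simple enough to keep next to your billing code. The sketch below uses hypothetical placeholders for the price, the plan names and the credit limits.

```javascript
// Hypothetical per-request price and plan limits, for illustration only.
const PRICE_PER_REQUEST_USD = 0.002;
const PLAN_MONTHLY_CREDITS = { free: 50, pro: 5000 };

// Forecast monthly spend from expected request volume.
function forecastMonthlyCost(requestsPerDay, days = 30, price = PRICE_PER_REQUEST_USD) {
  return requestsPerDay * days * price;
}

// Cap usage by plan: may the user spend `cost` more credits this month?
function canSpendCredits(plan, usedThisMonth, cost = 1) {
  const limit = PLAN_MONTHLY_CREDITS[plan];
  if (limit === undefined) throw new Error(`Unknown plan: ${plan}`);
  return usedThisMonth + cost <= limit;
}

// ROI for one feature: (value attributed to it - API spend) / API spend.
function roi(valueUsd, apiCostUsd) {
  return apiCostUsd === 0 ? Infinity : (valueUsd - apiCostUsd) / apiCostUsd;
}
```

For example, 10,000 requests per day at the placeholder price forecasts to about $600 per month.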

How AI APIs fit into product & engineering

The most effective teams standardize each AI API integration as two things: a product deliverable and an engineering wrapper.

Product: define the deliverable (not “we integrated AI”)

Define what “done” means in output terms:

- formats (PNG/JPG), dimensions, variants

- failure behavior (fallback, retry, partial output)

- time budgets (p95 latency, queue timeout)

- traceability (job ID, audit logs)

This gives PMs clear acceptance criteria and helps engineering build stable contracts.
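Acceptance criteria are easier to enforce when they live in code as well as in the PRD. The sketch below assumes a hypothetical `DELIVERABLE_SPEC` and a per-job metadata record recorded by your gateway. Its p95 helper uses the nearest-rank method, which is one of several common percentile definitions.

```javascript
// Hypothetical acceptance criteria for one AI deliverable, written as data.
const DELIVERABLE_SPEC = Object.freeze({
  formats: ["png", "jpg"],
  maxWidth: 4096,
  maxHeight: 4096,
  p95LatencyMs: 8000,
});

// Checks one job's result metadata; returns a list of violations (empty = accepted).
function checkDeliverable(result, spec = DELIVERABLE_SPEC) {
  const problems = [];
  if (!result.jobId) problems.push("missing jobId (traceability)");
  if (!spec.formats.includes(result.format)) problems.push(`unsupported format: ${result.format}`);
  if (result.width > spec.maxWidth || result.height > spec.maxHeight) {
    problems.push("dimensions exceed spec");
  }
  return problems;
}

// p95 of observed job durations, nearest-rank method (integer math avoids float rounding).
function p95(latenciesMs) {
  if (latenciesMs.length === 0) return NaN;
  const sorted = [...latenciesMs].sort((a, b) => a - b);
  const rank = Math.ceil((95 * sorted.length) / 100); // 1-based rank
  return sorted[rank - 1];
}

// True if the observed p95 fits the time budget (false for an empty window).
function meetsLatencyBudget(latenciesMs, spec = DELIVERABLE_SPEC) {
  return p95(latenciesMs) <= spec.p95LatencyMs;
}
```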

Engineering: use an “AI Gateway” pattern

Instead of calling providers from many services, create one internal layer:

- central API key management

- request validation + rate limits

- observability (latency, error codes, success rate)

- retries + circuit breaking

- output persistence (store results in your own S3/R2/OSS + serve via your CDN)

This is especially important if result URLs are temporary per provider policy. (For AILabTools specifics, reference the policy doc below.)
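The "retries + circuit breaking" item can be covered by a minimal circuit breaker like the one below. It is a hand-rolled sketch, not a specific library's API, and its thresholds are placeholders. Once the cooldown ends, this simple version lets every caller through rather than admitting only a single trial request.

```javascript
// Minimal circuit breaker for provider calls made through the gateway.
// After `failureThreshold` consecutive failures the circuit opens and calls
// fail fast until `cooldownMs` passes. Calls are then let through again
// ("half-open"): a success closes the circuit, a failure re-opens it at once.
class CircuitBreaker {
  constructor({ failureThreshold = 5, cooldownMs = 30_000, now = () => Date.now() } = {}) {
    this.failureThreshold = failureThreshold;
    this.cooldownMs = cooldownMs;
    this.now = now;          // injectable clock for testing
    this.failures = 0;
    this.openedAt = null;    // null means the circuit is closed
  }

  async call(fn) {
    if (this.openedAt !== null && this.now() - this.openedAt < this.cooldownMs) {
      throw new Error("circuit open: provider temporarily disabled");
    }
    try {
      const result = await fn();
      this.failures = 0;
      this.openedAt = null; // success closes the circuit
      return result;
    } catch (err) {
      this.failures += 1;
      if (this.openedAt !== null || this.failures >= this.failureThreshold) {
        this.openedAt = this.now(); // trip, or re-trip after a failed half-open call
      }
      throw err;
    }
  }
}
```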

Governance checklist: privacy, reliability, cost

Use this as a copy-paste section for your PRD/tech spec.

Privacy & retention

- ✅ Document upload handling (stored vs not stored)

- ✅ Document output retention window

- ✅ Download-and-store outputs to your own storage if needed long-term

- ✅ Ensure customer data is not reused for training (state policy clearly)

Reliability

- ✅ Timeout defaults (e.g., 60s), plus retries with exponential backoff

- ✅ Queue for heavy workloads; cap per-user concurrency

- ✅ Fallback strategy (return original, show “try again”, or use last cached result)

- ✅ Incident readiness: error codes, request IDs, logs, dashboards
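The retry and fallback items above can be combined into one wrapper. This sketch assumes exponential backoff with "full jitter". The defaults (3 retries, 500 ms base delay, 8 s cap) are placeholders. Narrow `isRetryable` to the errors your provider documents as transient, such as timeouts, 429s and 5xx responses.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retries `fn` with exponential backoff and full jitter. If every attempt
// fails, it returns `fallback(lastError)` when provided, else rethrows.
async function withRetry(fn, {
  retries = 3,
  baseDelayMs = 500,
  maxDelayMs = 8000,
  isRetryable = () => true, // narrow this to transient errors
  fallback,                 // e.g. return the original image or a cached result
  sleepFn = sleep,          // injectable for testing
} = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await fn(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === retries || !isRetryable(err)) break;
      // Full jitter: random delay in [0, min(maxDelay, base * 2^attempt)).
      const cap = Math.min(maxDelayMs, baseDelayMs * 2 ** attempt);
      await sleepFn(Math.random() * cap);
    }
  }
  if (fallback) return fallback(lastError);
  throw lastError;
}
```

Randomizing the delay keeps many clients that failed together from retrying in lockstep against a recovering provider.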

Cost controls

- ✅ Cache repeated inputs (hash → stored output URL)

- ✅ Deduplicate identical requests across sessions

- ✅ Enforce quotas (per IP / per user / per workspace)

- ✅ Track cost per workflow and cost per activated user
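Caching and deduplication both depend on a stable key for "the same input". This sketch builds that key from a SHA-256 hash (Node's built-in `crypto`) of the input bytes plus the parameters that change the output. It also shares one in-flight provider call among identical concurrent requests. The `DedupCache` class is hypothetical and in-memory only. Because `JSON.stringify` is sensitive to key order, build `params` consistently.

```javascript
const { createHash } = require("node:crypto");

// Cache key = SHA-256 over input bytes + output-affecting params, so the same
// image requested with a different return_form is not a cache hit.
function cacheKey(inputBuffer, params) {
  return createHash("sha256")
    .update(inputBuffer)
    .update(JSON.stringify(params))
    .digest("hex");
}

class DedupCache {
  constructor() {
    this.store = new Map();    // key -> stored output URL (use Redis/DB in production)
    this.inFlight = new Map(); // key -> pending promise shared by identical requests
  }

  async getOrCompute(inputBuffer, params, compute) {
    const key = cacheKey(inputBuffer, params);
    if (this.store.has(key)) return this.store.get(key);
    if (this.inFlight.has(key)) return this.inFlight.get(key);
    // Only successful results are cached; failures are retried on the next request.
    const pending = Promise.resolve()
      .then(() => compute())
      .then((url) => { this.store.set(key, url); return url; })
      .finally(() => this.inFlight.delete(key));
    this.inFlight.set(key, pending);
    return pending;
  }
}
```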

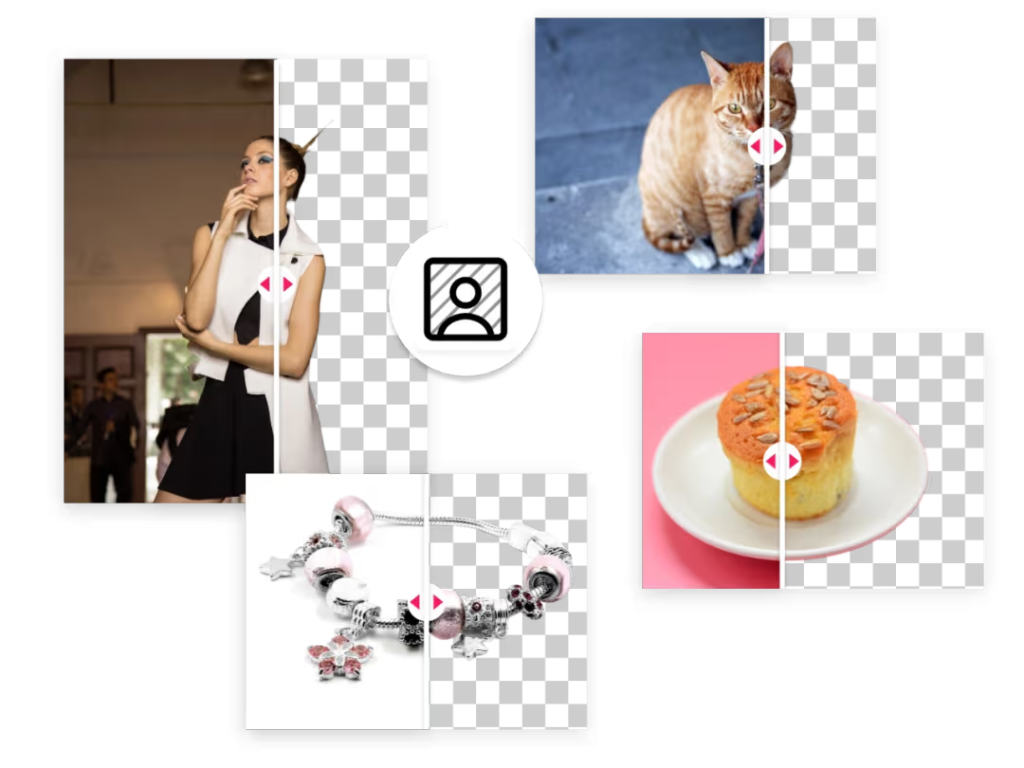

API example: Universal Background Removal (Node.js)

Below is a minimal integration example using Universal Background Removal—a good “first API” because it demonstrates the typical workflow: upload → process → get a result URL.

Use case examples

- UI-ready transparent PNG

- white background output for product images

- a mask output for downstream editing

Tip for production: treat provider result URLs as delivery URLs, not your long-term storage. Download and persist outputs to your own object storage + CDN.

cURL quick test

```bash
curl --request POST \
  --url https://www.ailabapi.com/api/cutout/general/universal-background-removal \
  --header 'Content-Type: multipart/form-data' \
  --header 'ailabapi-api-key: <api-key>' \
  --form image='@./input.jpg' \
  --form 'return_form=whiteBK'
```

Common `return_form` values:

- `mask`: best for compositing or further edits
- `whiteBK`: clean white background output
- `crop`: auto-crop tighter framing

Node.js (axios + form-data)

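```javascript
/**
 * npm i axios form-data
 * Node 18+, ES modules ("type": "module" in package.json, or save as .mjs)
 */
import axios from "axios";
import FormData from "form-data";
import fs from "fs";

const API_KEY = process.env.AILAB_API_KEY;

export async function universalBackgroundRemoval(imagePath, returnForm = "whiteBK") {
  if (!API_KEY) {
    throw new Error("AILAB_API_KEY is not set");
  }

  // Multipart body: the image file plus the requested output form.
  const form = new FormData();
  form.append("image", fs.createReadStream(imagePath));
  form.append("return_form", returnForm); // mask | whiteBK | crop

  // axios rejects on a non-2xx HTTP status or when the 60 s timeout elapses.
  const res = await axios.post(
    "https://www.ailabapi.com/api/cutout/general/universal-background-removal",
    form,
    {
      headers: {
        ...form.getHeaders(), // multipart Content-Type including the boundary
        "ailabapi-api-key": API_KEY,
      },
      timeout: 60_000,
      maxBodyLength: Infinity, // allow large image uploads
    }
  );

  // Application-level errors are reported via error_code (non-zero = failure).
  const j = res.data;
  if (j?.error_code !== 0) {
    throw new Error(`AILabTools error: ${j?.error_code} ${j?.error_msg || ""}`);
  }

  // j.data.image_url: persist to your own storage/CDN for long-term use
  return j.data.image_url;
}
```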
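Following the production tip above, you can copy the returned `image_url` into storage you control as soon as the call succeeds. This is a sketch with several assumptions. Local disk stands in for S3/R2/OSS, and the `persistResult` helper and its file naming are hypothetical. It relies on the global `fetch` available in Node 18+. It is written as CommonJS, so convert the `require` lines to `import` if you keep the ESM setup above.

```javascript
const fs = require("node:fs/promises");
const path = require("node:path");

// Downloads a provider result URL and writes it to storage you control.
// Swap `fs.writeFile` for your object-storage SDK's upload call in production.
// `fetchImpl` defaults to Node 18+'s global fetch and is injectable for tests.
async function persistResult(resultUrl, destDir, jobId, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(resultUrl);
  if (!res.ok) {
    throw new Error(`Download failed: HTTP ${res.status} for job ${jobId}`);
  }
  const bytes = Buffer.from(await res.arrayBuffer());
  // Keep the provider's file extension if the URL has one; otherwise assume .png.
  const ext = path.extname(new URL(resultUrl).pathname) || ".png";
  await fs.mkdir(destDir, { recursive: true });
  const filePath = path.join(destDir, `${jobId}${ext}`);
  await fs.writeFile(filePath, bytes);
  return filePath; // serve this via your CDN instead of the provider URL
}
```

For example, `persistResult(url, "./outputs", jobId)` stores the result under your own job ID. Later requests can then be served from your CDN even after the provider URL expires.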

AI APIs vs self-training models: how to decide

Here’s a decision table PMs and engineering leads can use:

| Dimension | AI APIs (integrate) | Self-training / self-hosting (build) |
|---|---|---|
| Time-to-market | Fast (days/weeks) | Slow (weeks/months) |
| Team requirement | Product + backend + infra | Adds ML + MLOps + GPU ops |
| Upfront cost | Low | High (data + compute + staffing) |
| Ongoing ops | Standard service ops | Model lifecycle + drift + deployment complexity |
| Iteration | Swap params/endpoints quickly | Longer research + retraining cycles |
| Best when | You need speed + predictability | You have proprietary data advantage or strict offline/private requirements |

A practical default strategy

- Start with AI APIs to validate value, UX, and economics.

- Only consider self-training once you hit a clear ceiling (quality, cost at scale, compliance constraints) and the business justifies long-term MLOps.
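A break-even estimate makes the "cost at scale" ceiling concrete. This sketch makes three assumptions: a flat per-request API price p, a fixed monthly self-hosting cost F (GPUs plus MLOps staffing), and a marginal self-hosted cost m per request. Under those assumptions, both options cost the same at V* = F / (p - m) requests per month. The function below encodes that formula, and the example figures are hypothetical.

```javascript
// Rough cost-only break-even between per-request API pricing and self-hosting.
//   API monthly cost       = V * p
//   Self-host monthly cost = F + V * m
//   Break-even: V * p = F + V * m  =>  V* = F / (p - m)
function breakEvenMonthlyVolume({ pricePerRequest, fixedMonthly, marginalCostPerRequest }) {
  const margin = pricePerRequest - marginalCostPerRequest;
  // If self-hosting costs at least as much per request, it never catches up on cost alone.
  if (margin <= 0) return Infinity;
  return fixedMonthly / margin;
}
```

With p = $0.01, m = $0.002 and F = $16,000, break-even is 2,000,000 requests per month. Below that volume the API is cheaper on cost alone, before quality or compliance constraints are considered.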

Recommended reading (anchors)

Use these as internal links (anchor text) in your post: